On January 22, 2017, a 14-year-old girl in foster care in Miami, Florida livestreamed her suicide on Facebook Live, hanging herself in the bathroom of her foster parents’ home. The stream began at around 3 a.m. and lasted about two hours. The incident shocked authorities and devastated both her biological and foster parents.

Suicide and self-harm are serious problems worldwide, especially among teenagers. In the age of the internet and social media, even subtle posts about hurt and pain can hint at something larger and far more dangerous. In light of recent events, Facebook has upgraded its AI algorithm to check users’ posts, comments, and livestreams for warning signs of suicidal behaviour.

Facebook’s human review team has confirmed that it contacts users who show warning signs of being at risk of harming themselves and offers them ways to get help. “This move is not only helpful but also critical,” says a suicide hotline chief.

This is not the first time Facebook has tried to help users at risk of self-harm, though. For years it has offered suicidal users advice and resources, but it could only do so after other users flagged a post, and by then it could be too late. Now Facebook uses its AI system to detect these warning signals itself, so it can reach users as early as possible.

Source: iDownloadBlog.com

The system works through pattern recognition. Facebook’s AI uses posts previously flagged for suicidal behaviour as a reference, then scans users’ posts for warning signs that fit those patterns. Posts about self-hatred, depression, and pain can be one signal; responses to those posts, such as “Are you okay?” and “I’m really worried about you,” can be another. Once detected, these posts are sent to Facebook’s Community Operations team for rapid review. Reviewers can then contact the user to offer help, and can reach out to the user’s friends and family members as well.

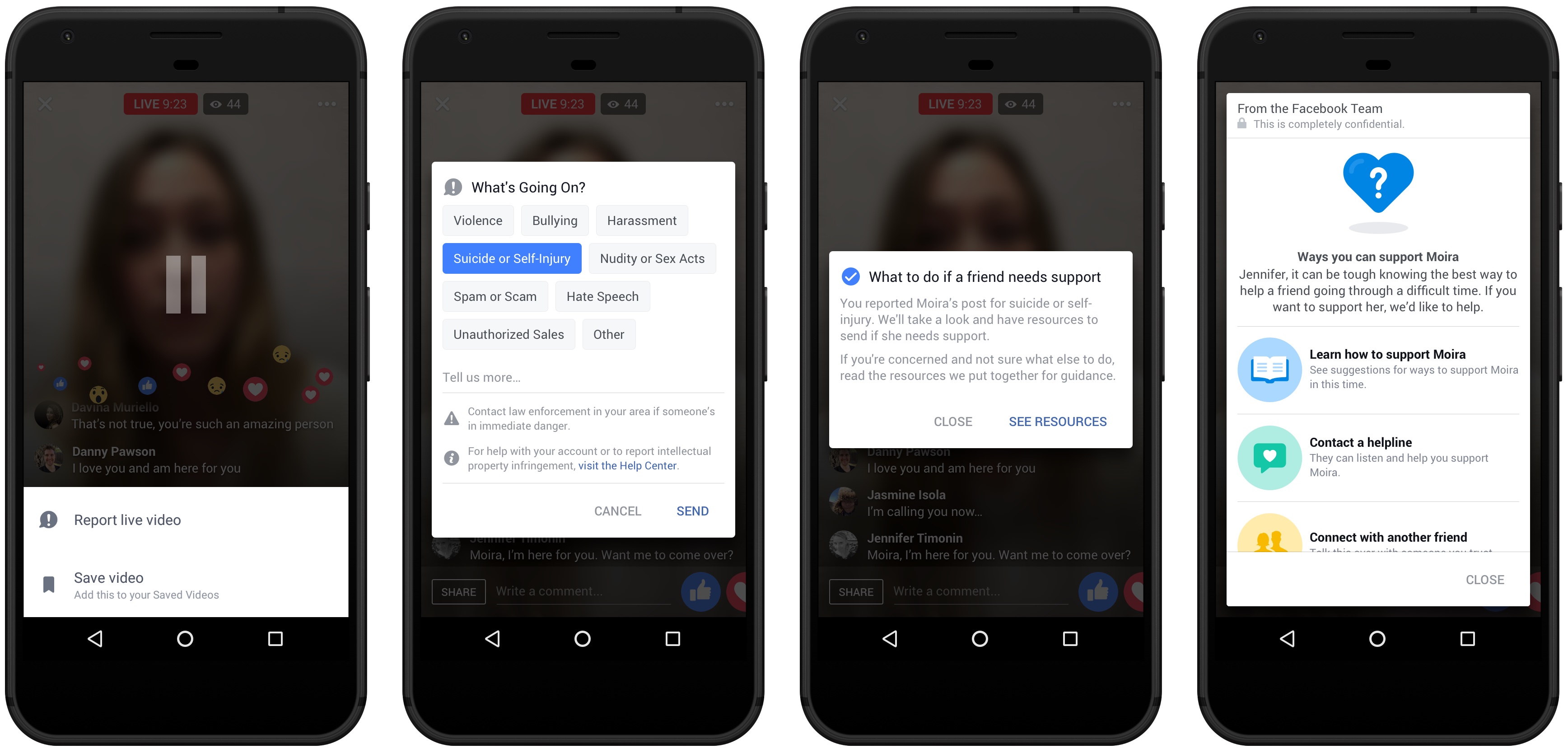

And it doesn’t end there. Facebook Live now has a tool that lets concerned viewers select a menu option to report that the broadcaster needs help; the viewer then receives advice on how to help the broadcaster. The live video is also flagged for Facebook’s team, who can overlay their own suggestions on the stream and offer help when needed.

“This opens up the ability for friends and family to reach out to a person in distress at the time they may really need it the most,” says Jennifer Guadagno, the project’s lead researcher.

“Their ongoing and future efforts give me great hope for saving more lives globally from the tragedy of suicide,” adds Dr. Dan Reidenberg, executive director of Save.org, who is also involved in the project.

Article Sources: